A Community Update on Cortex Transcript Normalization: Accuracy, Transparency, and Our Continued Commitment to Applicants

As a physician, a former residency applicant, and co-founder of Thalamus, I know how personal and high-stakes this process feels, especially at the beginning of the residency recruitment season. Every grade, every interview, every decision carries enormous weight. The trust of students, schools, and programs means everything to us — and I want to take a moment to speak directly to that trust.

This is a follow-up to our October 6 blog post: Cortex Core Clerkship Grades and Transcript Normalization.

Recently, there has been online discussion regarding the Cortex transcript normalization tool. I want to clarify what happened, what we’ve learned, and most importantly how we’re moving forward together.

The short version: Cortex is now >99.3% accurate. The underlying Electronic Residency Application Service® (ERAS®) application data — including your official transcripts and Medical Student Performance Evaluations (MSPEs) — has never been affected, and there is no current evidence that applicants’ interview outcomes have been impacted. Only about 10% of residency programs have evaluated and selected applicants using Cortex so far this season.

What Happened

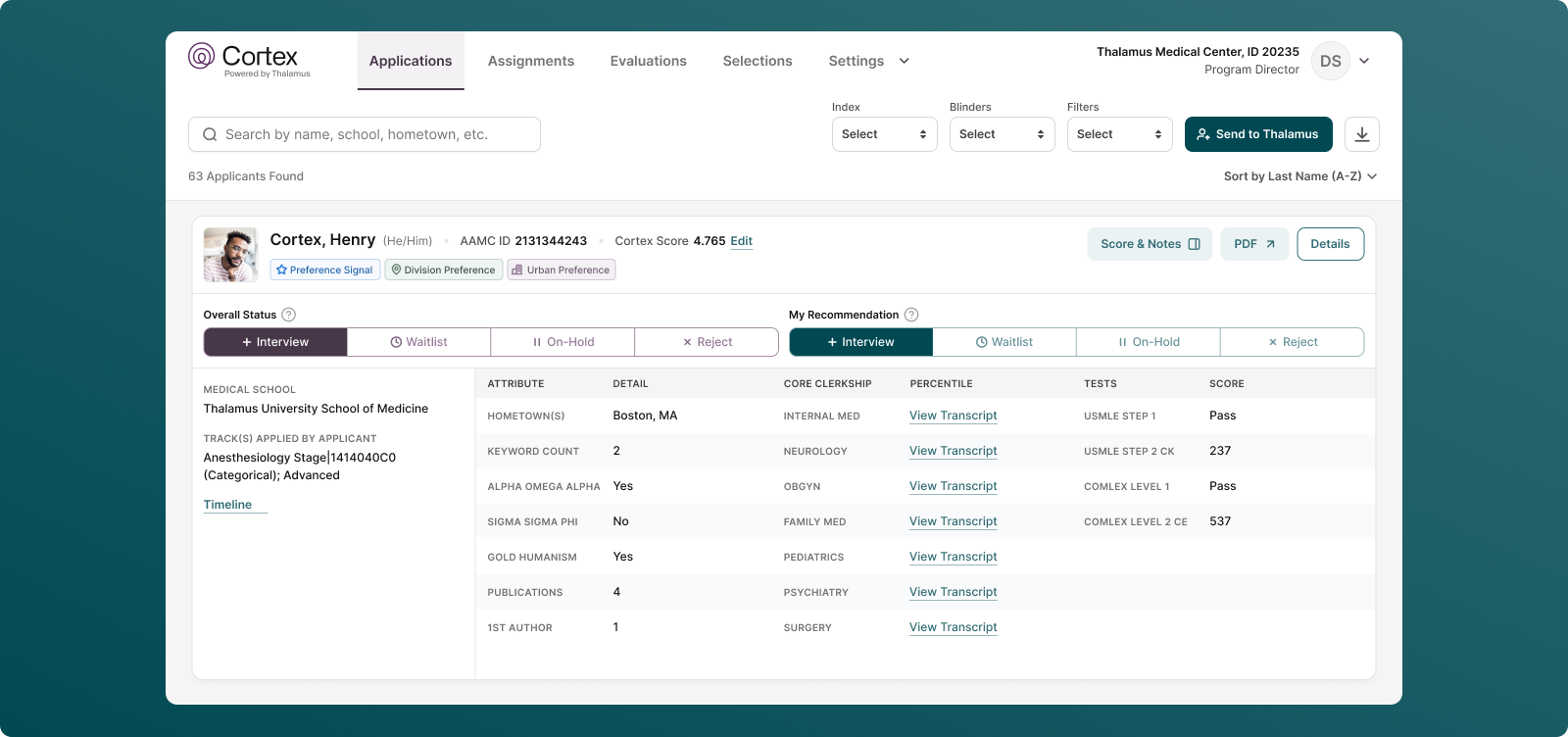

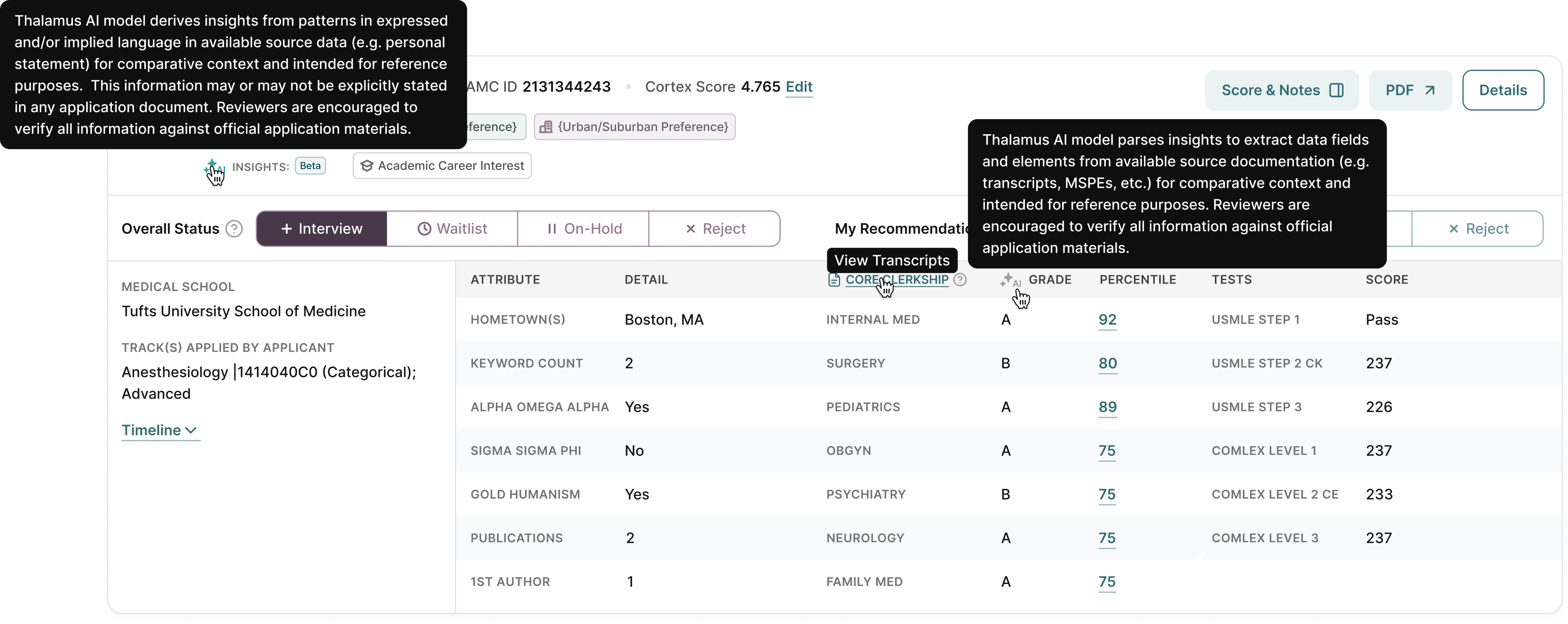

Because medical schools use more than 40 different grading scales and vastly different grade distributions, Cortex uses optical character recognition (OCR) and automated document processing to read and standardize grades from official medical school transcripts. This has been a key feature of Cortex since 2020, and accuracy this season remains consistent with prior years. Overall, Cortex is now >99.3% accurate.

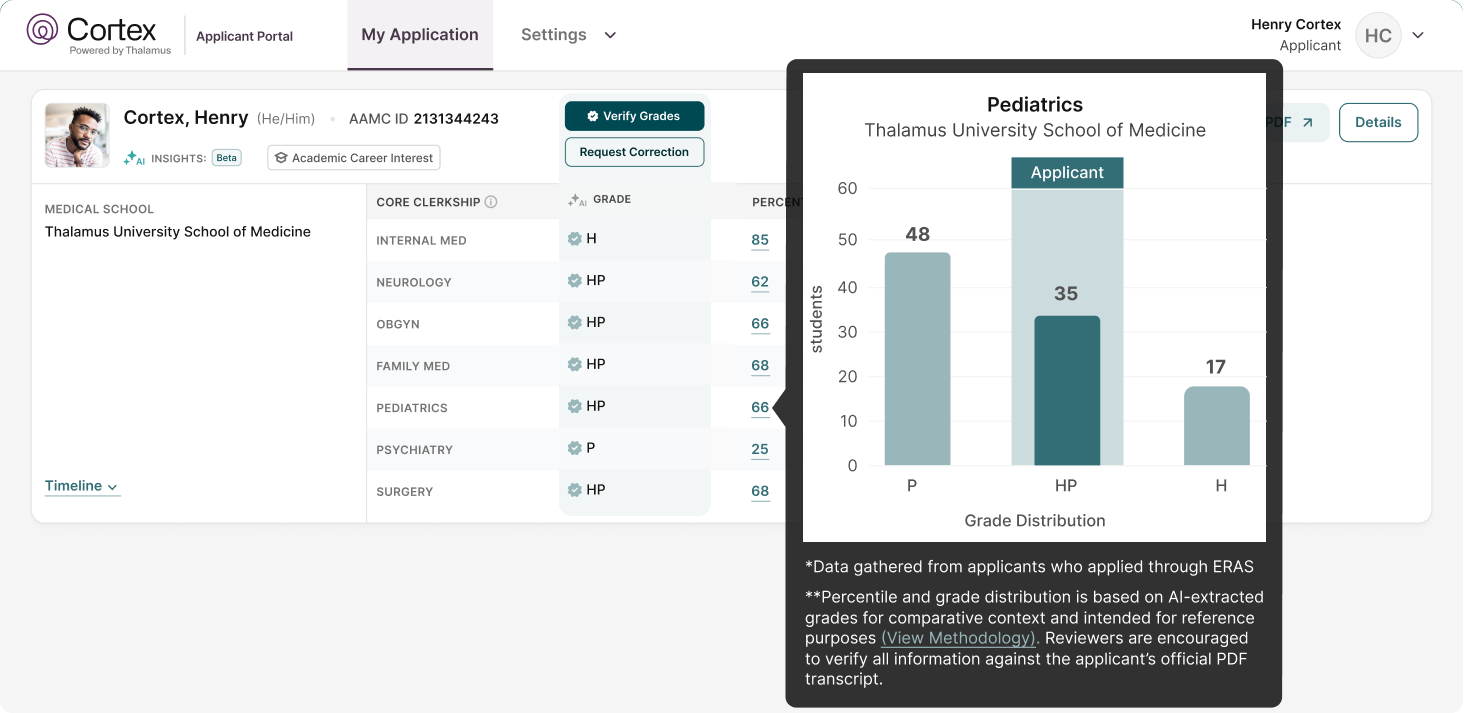

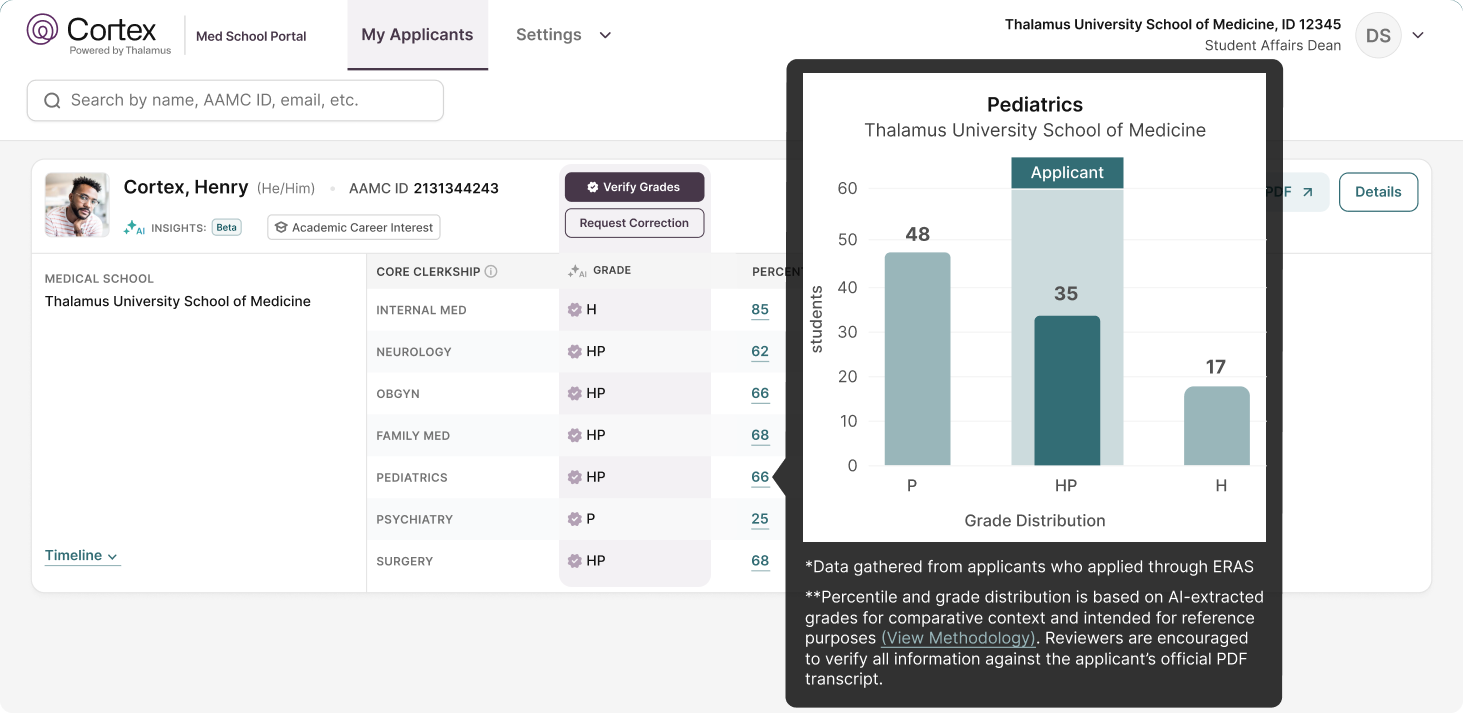

This normalization process helps programs fairly and consistently review applicants and their core clerkships in a standardized way, allowing for an apples-to-apples comparison of medical students across institutions. It clarifies differences in grading standards and levels the playing field for all applicants.

For example, the share of students receiving a grade of “honors” in a core clerkship varies widely across medical schools: it is 0% at Pass/Fail schools, and otherwise ranges from 5% at the low end to 99.9% at the high end, with 30-40% at the average institution. Cortex provides percentiles so that program directors can identify these patterns in a more streamlined way, mitigate perceived grade inflation, and evaluate applicants in a comparative context. The tool also identifies items from applicant source documentation (MSPEs, transcripts, etc.) that previously may have gone unnoticed.
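To make the idea of percentile context concrete, here is a minimal sketch of how the same grade can sit in very different percentile bands depending on a school's grade distribution. It is an illustration only and not Cortex's actual methodology; the function name `percentile_bands`, the grade labels, and both school distributions are hypothetical.

```python
from typing import Dict, List, Tuple


def percentile_bands(
    distribution: Dict[str, int], order: List[str]
) -> Dict[str, Tuple[int, int]]:
    """Map each grade to the (low, high) percentile band it occupies.

    distribution: percent of students receiving each grade (sums to 100).
    order: grade labels from lowest to highest.
    """
    bands: Dict[str, Tuple[int, int]] = {}
    cumulative = 0
    for grade in order:
        share = distribution.get(grade, 0)
        bands[grade] = (cumulative, cumulative + share)
        cumulative += share
    return bands


# Hypothetical distributions (not real school data).
order = ["Pass", "High Pass", "Honors"]
school_a = {"Pass": 25, "High Pass": 70, "Honors": 5}   # honors is rare
school_b = {"Pass": 5, "High Pass": 15, "Honors": 80}   # honors is common

bands_a = percentile_bands(school_a, order)
bands_b = percentile_bands(school_b, order)
print("School A honors band:", bands_a["Honors"])
print("School B honors band:", bands_b["Honors"])
```

In this sketch, "Honors" at the first school covers only the top 5% of the class, while at the second it covers the top 80%, which is the kind of difference a percentile view is meant to surface.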

At the beginning of this residency recruitment season, a small subset of extracted grades required either manual verification or correction due to text recognition variances caused by poor quality or misaligned orientation of documents. It is this pool of grade extractions that has caused concern in the community.

Importantly:

- All official transcripts and MSPEs have always been transmitted and displayed correctly in Cortex. The underlying data has not been, and never was, altered.

- The issue was not caused by “AI hallucination,” but by OCR reading variances.

- When inaccuracies were reported or identified through either internal verification or via user outreach, they were immediately corrected.

To date, Thalamus has received just 10 reported inaccuracies out of over 4,000 customer inquiries this season. In every case, the reviewing faculty correctly identified the accurate grade when reviewing the official transcript and MSPE.

Additionally, because the tool was offered free of charge to the community through the AAMC-Thalamus collaboration for the first time this year, many programs did not participate in the full onboarding process that has historically accompanied the purchase of Cortex. This meant that some programs were less familiar with the tool and, for example, thought the percentiles were supposed to match the MSPE (per the methodology, they were not designed to).

As issues were raised by users, they were rapidly investigated and addressed. Unfortunately, a few well-intentioned communications from community members were shared by users in public forums without the full understanding of the impact or the underlying situation.

How Thalamus Responded for Accuracy

Thalamus has internal verification processes to assess each medical school’s precision, recall, and F1 score:

- Precision measures how correct the extracted data are. In other words, when Cortex identifies a grade, how often is it right?

- Recall measures how complete the extraction is: how often Cortex correctly finds and captures every grade that actually appears on the transcript.

- F1 Score combines both precision and recall into a single measure of overall accuracy and consistency. It balances being correct (precision) with being complete (recall).
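As a rough illustration of these three measures, the sketch below scores one hypothetical transcript by comparing extracted grades against a manually verified set. The function name `extraction_scores`, the data layout (clerkship name mapped to grade), and the sample grades are assumptions for illustration, not Thalamus's internal implementation.

```python
from typing import Dict, Tuple


def extraction_scores(
    extracted: Dict[str, str], truth: Dict[str, str]
) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) for one transcript.

    extracted: clerkship -> grade as read by the extraction step.
    truth: clerkship -> grade as verified by a human reviewer.
    """
    # A true positive is an extracted grade that matches the verified grade.
    true_positives = sum(
        1 for clerkship, grade in extracted.items() if truth.get(clerkship) == grade
    )
    precision = true_positives / len(extracted) if extracted else 0.0
    recall = true_positives / len(truth) if truth else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical example: six verified grades, five extracted, one misread.
truth = {
    "Internal Medicine": "Honors",
    "Surgery": "High Pass",
    "Pediatrics": "Honors",
    "Psychiatry": "Pass",
    "OB/GYN": "High Pass",
    "Family Medicine": "Honors",
}
extracted = {
    "Internal Medicine": "Honors",
    "Surgery": "High Pass",
    "Pediatrics": "Honors",
    "Psychiatry": "High Pass",  # misread grade lowers precision and recall
    "OB/GYN": "High Pass",
    # "Family Medicine" was not captured, which lowers recall
}

precision, recall, f1 = extraction_scores(extracted, truth)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

Here four of the five extracted grades are right (precision 4/5) and four of the six verified grades were captured correctly (recall 4/6), so F1 works out to 8/11.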

Prior to the start of the 2026 season, these verification processes identified numerous transcripts with low accuracy. As noted above, this was generally due to challenges with applying OCR to the source documents. As designed, these grades were never extracted or displayed in the Cortex user interface, and users were instructed to view the original transcript.

At the start of the season, Thalamus began to receive this year’s transcripts from applicants. We ran additional automated internal validation processes in real time to compare new transcript versions with our training set, removed transcripts with known errors, and manually reviewed a sample from each school. In addition, when Cortex users reported a potential inaccuracy, Thalamus immediately removed the extracted grade output from display until all grades could be confirmed.

In the few reported instances, including those identified through internal Thalamus quality assurance processes, program directors and other faculty correctly identified the accurate grade when reviewing the extracted grade alongside the official transcript and/or MSPE.

In all instances, grades that were inaccurate were promptly corrected, and several that were reported as inaccurate were confirmed to have been accurate. In either case, the corrected or confirmed-accurate grades were displayed in the Cortex user interface.

How Thalamus Responded from a Technical Perspective

For those of you interested in a more detailed description of our response, Thalamus ran each impacted transcript through three different ChatGPT models (GPT-4o-mini, 4.1, 5-mini) and compared the output (which aligns with our established methodology). In parallel, the team accelerated the development of an additional data pipeline that extracts grades from MSPEs to augment the previously established pipeline for transcripts. Output from both was compared across the three ChatGPT models above.
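One simple way to compare output across several models is to accept a grade only when every model agrees and to flag everything else for human review. The sketch below shows that idea under stated assumptions: the post does not describe the actual comparison rule, so the unanimous-agreement policy, the function names, and the sample outputs are hypothetical, and no model is actually called here.

```python
from typing import Dict, List, Optional, Tuple

# Labels taken from the post; used here only as dictionary keys.
MODELS = ["GPT-4o-mini", "GPT-4.1", "GPT-5-mini"]


def consensus_grade(outputs: Dict[str, Optional[str]]) -> Optional[str]:
    """Return the shared grade if all models agree on a non-empty value, else None."""
    values = {outputs.get(model) for model in MODELS}
    if len(values) == 1 and None not in values:
        return next(iter(values))
    return None


def triage(
    transcript: Dict[str, Dict[str, Optional[str]]]
) -> Tuple[Dict[str, str], List[str]]:
    """Split clerkships into agreed grades and ones needing manual review."""
    accepted: Dict[str, str] = {}
    needs_review: List[str] = []
    for clerkship, outputs in transcript.items():
        grade = consensus_grade(outputs)
        if grade is None:
            needs_review.append(clerkship)
        else:
            accepted[clerkship] = grade
    return accepted, needs_review


# Hypothetical per-model outputs for one transcript.
example = {
    "Surgery": {"GPT-4o-mini": "Honors", "GPT-4.1": "Honors", "GPT-5-mini": "Honors"},
    "Pediatrics": {"GPT-4o-mini": "Pass", "GPT-4.1": "High Pass", "GPT-5-mini": "High Pass"},
}
accepted, needs_review = triage(example)
print("accepted:", accepted)
print("needs review:", needs_review)
```

Requiring unanimity is deliberately conservative: a disagreement hides the extracted grade rather than risking a wrong one, which matches the post's emphasis on falling back to the original document.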

A generalizable and representative validation data set of ~20,000 grades was also compared to the output of both transcripts and MSPEs for confirmation/validation. The more accurate output of the transcript vs. MSPE was used for display in Cortex. The combination of these efforts increased data accuracy to >99.3%.
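The selection between the transcript and MSPE pipelines can be pictured as scoring each one against the manually validated grades and displaying the better performer. The sketch below assumes this is done per school and that ties favor the transcript; both choices, along with the function names and sample data, are assumptions rather than details from the post.

```python
from typing import Dict, Tuple

# (applicant_id, clerkship) -> grade; identifiers are hypothetical.
GradeKey = Tuple[str, str]
Grades = Dict[GradeKey, str]


def accuracy(extracted: Grades, validated: Grades) -> float:
    """Fraction of validated grades that the pipeline reproduced exactly."""
    if not validated:
        return 0.0
    correct = sum(
        1 for key, grade in validated.items() if extracted.get(key) == grade
    )
    return correct / len(validated)


def pick_pipeline(transcript_out: Grades, mspe_out: Grades, validated: Grades) -> str:
    """Return which pipeline's output to display for this school."""
    transcript_acc = accuracy(transcript_out, validated)
    mspe_acc = accuracy(mspe_out, validated)
    # Assumption: on a tie, keep the longer-established transcript pipeline.
    return "mspe" if mspe_acc > transcript_acc else "transcript"


# Hypothetical validation sample for one school.
validated = {
    ("a1", "Surgery"): "Honors",
    ("a1", "Pediatrics"): "High Pass",
    ("a2", "Surgery"): "Pass",
    ("a2", "Pediatrics"): "Honors",
}
transcript_out = dict(validated)
transcript_out[("a2", "Surgery")] = "High Pass"  # one OCR misread
mspe_out = dict(validated)                       # all four correct

choice = pick_pipeline(transcript_out, mspe_out, validated)
print("display output from:", choice)
```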

Product enhancements added additional reference “tool tips” to provide context for the feature, including explicit labeling of extracted data within the user interface. User permissions were added for residency programs to toggle the visibility of the extracted grades, augmenting already existing functionality that enables programs to screen/hide selected data elements.

What This Means for Applicants

We recognize that any uncertainty around grades may cause anxiety — especially during interview season. But it’s important to put this into context.

- Faculty and program directors cannot filter, sort, search, or auto-reject applicants based on the extracted data.

- Only about 10% of residency programs have evaluated and selected applicants using Cortex so far this season.

The Cortex transcript normalization tool is not used in isolation. It was built as a reference tool to be used alongside all of your official application documents. The tool aligns with our philosophy that AI does not replace the need for human review but rather supplements it.

Based on over six years of user research observing how programs use Cortex, we know they rely upon your full application when making selection decisions as part of a broader holistic review process. Your full application is reviewed because programs evaluate not only grades, but also academic and clinical performance in sub-internships, summary statements in MSPEs, and other elements across the application.

Please be assured that we take the integrity and reliability of application data very seriously. In this instance, the data show that the likelihood of harm is extraordinarily low based on established and observed faculty review patterns, including requiring opening of the transcript or MSPE to understand performance in sub-internships and other clinical settings.

Why, How, and When We Notify Applicants

Some have asked why we didn’t email every applicant about this directly. The reason is simple:

We didn’t want to create unnecessary panic or confusion by rushing a broad communication to the community.

In making this decision, we weighed three facts. First, we had very few user-reported cases of inaccuracies. Second, for the cases identified through user outreach and our own internal quality assurance checks, we confirmed that manual review of the source transcript displayed the correct information. Finally, we confirmed that the program did not make decisions based on the extracted grade in the Cortex user interface (in isolation or otherwise), but instead relied upon the original transcript or MSPE.

Based on that, we prioritized direct outreach — verifying and correcting the few cases that were reported — while working with the AAMC, medical schools, and program leadership to communicate verified information through established, official channels.

In hindsight, it’s clear many students want more visibility into how their data is displayed. We apologize to those who believe that more widespread communication would have been helpful.

In those conversations with medical schools and program leadership, we heard the community’s call for a more public message. In response, we hosted a webinar with 190 representatives from medical schools on October 14. This provided schools with the opportunity to advocate for their students, ask questions, and then disseminate accurate information to students.

We are also planning a separate webinar in the coming week to provide the same opportunity for applicants (see below).

We hear your feedback, we appreciate it, and we’re acting on it.

What We’re Doing Next

Transparency and collaboration are how trust is rebuilt. We’re taking several concrete steps to make that happen:

- Improved collaboration with medical schools: We’re opening conversations with medical schools to discuss the possibility for direct data transmission of MSPEs and transcripts to Thalamus, eliminating the need to extract data from source documents. This mirrors the “digital” transmission of other application elements we receive from the AAMC ERAS program.

- AI Advisory Board: We’re forming a community-led board of students, medical schools, and residency programs to provide ongoing oversight, feedback, and governance for all AI-enabled tools at Thalamus. Email us at ai.collaboration@thalamusgme.com to express interest in joining the board or for more information.

- Open webinars: We’ll be hosting a live webinar for applicants to walk through how Cortex works, explain this year’s updates, and answer questions directly.

- Continuous data enhancement: We accelerated development of data pipelines to process MSPEs and are continuously expanding the manually reviewed dataset.

- Enhanced validation: Our verification processes now include expanded quality assurance steps prior to processing, multiple model comparisons across both transcripts and MSPEs, as well as human review to ensure accuracy and precision.

- Applicant & Medical School Portal: We’re building a new portal that will allow both applicants and schools to see how their data is displayed in Cortex, including OCR-extracted grades, and directly validate or confirm it. While the portal will not be available this season, we are committed to rolling it out for the 2027 ERAS season (beginning July 2026).

These steps are not just about fixing an isolated issue. They are our way of setting a higher bar for transparency, collaboration, and accountability in graduate medical education technology.

Our Commitment Moving Forward

At the end of the day, this is about trust — trust between students, schools, and programs, and trust that technology will be applied responsibly to create a more equitable transition to residency.

Thalamus was founded when I was a medical student, alongside my co-founder who is a program director. That lived experience continues to guide everything we do. We’ll keep listening, keep improving, and keep partnering with the community to make the system fairer, more transparent, and more human for everyone.

We’ve learned from this, and we’re using it to build something even stronger together.

Join the Conversation

We’ll be hosting a live webinar for applicants and medical school representatives to walk through the Cortex transcript normalization tool, share updates, and answer questions.

Time/Date TBD -- Please check back here on Friday, Oct. 17 for registration information.

Questions? Please contact us directly at customercare@thalamusgme.com

Thank you,

Jason

Jason Reminick, MD, MBA, MS

CEO and Founder, Thalamus
